Academies, training centres and colleges are under growing pressure to prove that their programmes lead to real work, not just new certificates.

Most reporting still stops at the same place. Enrolment. Completion. A basic destination line on a dashboard that says “employed,” “in further study” or “other.” That view misses what really matters in vocational and professional education.

Are graduates actually in practice?

Are they serving clients reliably?

Are they building sustainable early careers rather than drifting away after six months?

For modern academies the missing piece is not more surveys or longer forms. It is the everyday conversations graduates have with real clients after they leave the classroom.

AI in chat is finally making that visible in a way that is light, safe and genuinely useful.

Why traditional outcomes data is not enough

Across English-speaking systems, academies and colleges are encouraged, and sometimes required, to publish statistics on completion and graduate destinations. In the US, community colleges and technical programmes report employment and transfer rates to state and federal agencies. In the UK, further education providers and independent training centres are judged on achievement, progression and destination measures for learners.

These are important signals, but they are blunt.

A graduate counted as “employed” might be working two hours a week in an unrelated job. Someone listed as “self-employed” might have one paying client and no idea how to find more. Once that tick box is filled, the academy loses sight of what happens next.

In service professions this gap is even sharper. The quality and stability of a personal training practice, a beauty business or a small tutoring service does not live in HR databases. It lives in hundreds of small interactions with real people.

That is where AI in chat changes the picture.

The quiet operating system of early careers

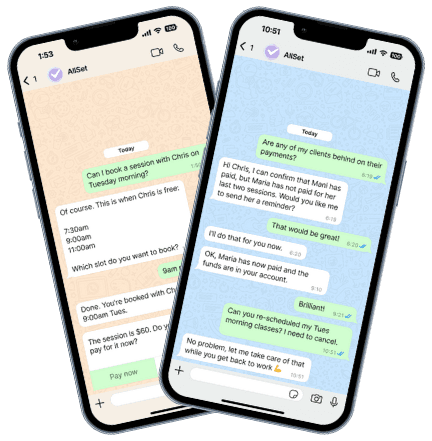

For most service-based graduates the real work does not start with a formal job contract. It starts in messaging.

They agree times with clients

They confirm sessions

They move appointments when life happens

They nudge for payments

They check in after a gap

Nearly all of that happens in WhatsApp, SMS or similar channels, not in a learning platform. It is invisible to the academy, yet it is exactly where work readiness shows up.

Until recently there was no practical way for academies to learn from this layer without infringing on privacy or adding more admin. AI in chat lets you do something different. Instead of reading messages, you can let AI help graduates run their practice and then look at the simple signals that fall out of that work.

From conversations to outcome signals

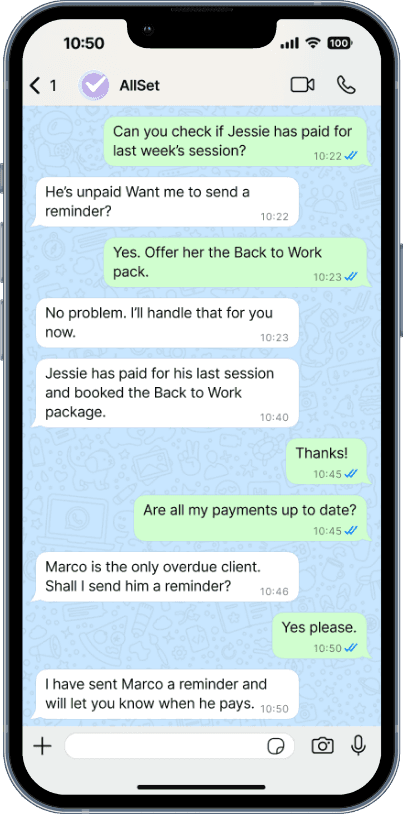

If an AI workforce is already helping graduates manage bookings, reminders and payments in chat, it can also surface light, aggregate data about how things are going.

You do not need to see who said what or read any personal details. You only need a handful of simple patterns, such as:

Activation

How many graduates are actively using their assistant to handle real client messages?

Session completion

What proportion of booked sessions go ahead rather than being cancelled or forgotten?

Recovery of late changes

When clients cancel late, how often is the slot rebooked with someone else?

Payment reliability

How often do clients pay on time once prompted? What proportion of booked work is matched by completed payments?

Early utilisation

In the first 30, 60 and 90 days after graduation, how many sessions or appointments does a typical graduate complete?
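To make the five signals above concrete, here is a minimal Python sketch of how they could be computed for one cohort. Everything in it is an assumption for illustration: the `Session` record, its field names and the status labels are hypothetical, not AllSet's actual data model. Activation is approximated as "has at least one booked session", and the "typical graduate" is taken as a simple mean across the cohort. The pseudonymous `graduate_id` is used only for counting; in practice that counting could happen before anything reaches the academy.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Session:
    """One booked session with no names or message content (hypothetical schema)."""
    graduate_id: str                     # pseudonymous ID, used only for counting
    day: int                             # days since graduation
    status: str                          # "completed", "cancelled_late" or "cancelled"
    rebooked: bool = False               # late cancellation: was the slot refilled?
    paid_on_time: Optional[bool] = None  # None when no payment was due


def ratio(numerator: int, denominator: int) -> Optional[float]:
    """Return a proportion, or None when there is nothing to measure."""
    return numerator / denominator if denominator else None


def cohort_signals(sessions: list[Session], cohort_size: int) -> dict:
    """Reduce session records to the five aggregate signals for one cohort."""
    completed = [s for s in sessions if s.status == "completed"]
    late = [s for s in sessions if s.status == "cancelled_late"]
    billed = [s for s in completed if s.paid_on_time is not None]
    active = {s.graduate_id for s in sessions}
    return {
        "activation": ratio(len(active), cohort_size),
        "session_completion": ratio(len(completed), len(sessions)),
        "late_change_recovery": ratio(sum(s.rebooked for s in late), len(late)),
        "payment_reliability": ratio(sum(s.paid_on_time for s in billed), len(billed)),
        # Mean completed sessions per graduate within 30, 60 and 90 days.
        "early_utilisation": {
            days: ratio(len([s for s in completed if s.day <= days]), cohort_size)
            for days in (30, 60, 90)
        },
    }


# Made-up records for a cohort of four graduates, one of whom never activated.
sessions = [
    Session("g1", 5, "completed", paid_on_time=True),
    Session("g1", 20, "cancelled_late", rebooked=True),
    Session("g2", 40, "completed", paid_on_time=False),
    Session("g2", 70, "cancelled_late"),
    Session("g3", 85, "completed", paid_on_time=True),
]
signals = cohort_signals(sessions, cohort_size=4)
```

Only the returned dictionary would need to reach the academy. A median could replace the mean in `early_utilisation` if a few very busy graduates would otherwise skew the picture.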

Each of these signals says something concrete about early career practice. Together they tell you more than any single employment tick box.

For example:

A cohort with strong activation and completion but weak payment reliability may need more focused teaching on pricing and deposits.

A cohort with steady sessions in the first 60 days but a drop at 90 days might need support with retention, referrals or energy management.

The point is not to track individuals. It is to give academies a living picture of whether their graduates are really operating as professionals in the field.

What this means for academies and colleges

Seen this way, AI in chat is not just a teaching aid. It becomes part of your quality and outcomes toolkit. For academy leaders and boards it offers:

Better evidence for regulators and funders

You can show not only where graduates go, but how many are actively practising in the months after graduation, supported by real operational signals rather than one-off surveys.

Stronger conversations with employers

When employers say “graduates are not quite work ready,” you can point to specific patterns in early client work and adjust programmes together rather than trading anecdotes.

Live feedback to refine curriculum

If you see that most drop-off happens at a particular stage, you can build support into the course rather than bolting it on afterwards.

A more honest alumni story

You can talk about graduate success without relying on a few flagship cases or self-reported wins. The story is grounded in everyday practice.

All of this is particularly powerful for academies training everyday service professionals in fitness, wellness, beauty, tutoring, trades, care or hospitality. These careers rely heavily on reliability, communication and basic operations. Aggregate signals from chat-based client work show how cohorts are performing on those dimensions, without anyone reading the messages themselves.

We explore this in more depth in our AllSet use case for academies and training centres.

Designing this responsibly

Turning conversation patterns into outcome signals must be done carefully. Trust is crucial. A responsible design would include:

Opt in participation

Graduates choose to use the academy-linked AI assistant for their client work. They understand what is and is not shared.

Aggregate reporting only

Academies see patterns across cohorts or programmes, not individual threads. Personal identifiers stay with the graduate and their clients.

Clear AI codes for learning and practice

Institutions publish simple, plain-language guidance on how AI is used in study, in assessments and in the transition into work.

Human oversight

Tutors, mentors and quality teams can review anonymised examples where needed, but AI is never the final decision maker on someone's competence or career.
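One way to make "aggregate reporting only" more than a promise is to refuse to report on groups that are too small to hide an individual. The sketch below illustrates that idea under stated assumptions: the threshold of 10 and the function name are hypothetical, and the input is a plain list of per-graduate completion rates with identifiers already removed.

```python
from statistics import mean
from typing import Optional

MIN_COHORT_SIZE = 10  # hypothetical threshold; each academy would set its own


def cohort_report(completion_rates: list[float]) -> Optional[dict]:
    """Summarise per-graduate completion rates for a cohort.

    Returns None when the cohort is smaller than MIN_COHORT_SIZE, so that
    a report can never be traced back to one or two graduates.
    """
    if len(completion_rates) < MIN_COHORT_SIZE:
        return None
    return {
        "graduates": len(completion_rates),
        "mean_session_completion": round(mean(completion_rates), 2),
    }


small_cohort = cohort_report([0.9, 0.4])   # too small: suppressed
large_cohort = cohort_report([0.8] * 12)   # large enough: summarised
```

In this sketch a suppressed cohort simply returns nothing. A real design might instead roll small cohorts up into a programme-level figure.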

Handled this way, AI in chat supports everyone in the system: learners, graduates, tutors and leaders.

From playbook to proof

Your existing AI strategy might already cover how students should learn with AI in the open, how to redesign assessments and how to give early career support in chat. If not, our piece on how modern academies use AI from classroom to first client is a useful starting point.

The missing step is to let that same AI layer quietly produce outcome signals that are useful outside the classroom.

Signals that reassure regulators and funders

Signals that help employers trust your graduates

Signals that help your own teams see what is really working

Certificates will still matter. So will completion and employment rates. But in practice, real credibility will come from being able to say:

“Here is how our graduates actually work with their clients in the first months. Here is the reliability, recovery and payment behaviour we can see in aggregate. Here is what we are doing about it.”

That is the kind of evidence modern academies and training centres can own with AI in chat.

Where AllSet fits

AllSet was built for exactly this layer of work. Our AI workforce helps graduates in service professions manage bookings, reminders and payments in WhatsApp. The same layer can give academies a clear, aggregate view of early career practice without extra admin.

If you want to see what conversation-level outcome signals could look like for your learners, talk to us. We will walk you through how AllSet already supports service professionals in chat and how the same approach can carry your academy’s brand and outcomes into graduates’ working lives.